VRS2S Virtual Reality and Speech to Speech Translation for Global Collaboration, a discussion from an Innovation Management perspective

Final Course Project submitted to the

MBA in Strategic Innovation Management

Lato sensu postgraduate programme, specialisation level

FGV Management Programme

April 2017


By

FUNDAÇÃO GETULIO VARGAS

FGV MANAGEMENT PROGRAMME

STRATEGIC INNOVATION MANAGEMENT COURSE

Virtual Reality and Speech to Speech Translation for Global Collaboration, a discussion from an Innovation Management perspective

Date: 08.04.2017

___________________________________

Ingrid Paola Stoekicht, D.Sc, Academic Coordinator

___________________________________

Maria Candida Torres, M.Sc, Supervisor

This paper discusses the feasibility of developing an MVP (Minimum Viable Product) for a Virtual Reality (VR) collaboration tool with facial tracking, motion tracking and speech to speech translation capabilities, intended to encourage global collaboration. It first sets out the business justification for the project and then discusses the technical challenges of building the desired product within the limits of current VR technology.

Table of Contents

Stafford Beer’s ‘Team Syntegrity’

Justification for developing the project

Entrepreneurialism and Ideation de-linked from place

Technological Benefits of immersive VR

Justification: VR Market Size and Growth, trends and tendencies

VR Market Dynamics Analysis

Motion Capture Technology considerations

Jobs to be done for the MVP

- Create a shared ‘virtual collaborative environment’

- Virtual avatar representation

- Simultaneously translate more than one language at the same time

Business intelligence research benchmarking

Feasibility probing of virtual reality for collaborative meetings

Benchmarking of Virtual Reality and collaborative meeting technology

Doghead Simulations’ rumii

Benchmarking of Speech to Speech (S2S) Translation Technology

Speech to Speech Benchmark Analysis

Objective

The objective is to manage the creation of a new product that uses technological advances to offer a new form of global social interaction. Specifically, the plan is to offer a platform that fosters global social interaction by transcending the barriers of location and language. The barrier of location is to be transcended by virtual reality (VR) technology, so that people can meet in a virtual meeting room using VR headsets (Head Mounted Displays, HMDs) and supporting technology that makes the meeting as lifelike as possible. The barrier of language is to be transcended by delivering machine translation to each user’s earpiece while they are in the VR meeting environment.

The primary aim of this technology is to become a platform for global entrepreneurship. It will enable projects to be carried out by small global teams who pool their collective talents and resources, but who are currently unable to meet because of the barriers of language and distance. It supports both project work and ideation processes. This makes it an inherently disruptive form of innovation, as currently only a small business elite can afford face to face, translated global meetings. To this end, the project aims to unlock the world’s latent potential for entrepreneurship by allowing the formation of groups whose strengths and abilities complement each other, but who have had no mechanism for meeting or understanding one another.

The secondary aim of the project is to be an enabling tool for international political collaboration. What I have in mind is the disruption of the United Nations, whose meetings are inefficient and logistically expensive. United Nations working groups are currently made up of representatives of different countries who must meet in person, at great expense, in order to work together. The mechanism I am proposing would allow international working groups to meet virtually and work on a shared project without these logistics costs. In addition, by being radically cheaper than an international meeting with simultaneous translation services, this platform could democratise the discussion of global policy, opening it to a wider group of participants.

In fact, the platform is intended to be as open as possible, so that people can use it for whatever purpose they wish. There are obvious benefits for entrepreneurs, for example, but the idea is that the platform facilitates global connections for anyone who is interested in making them.

Inspiration

The inspiration for this project is Stafford Beer’s ideas on ‘Team Syntegrity’ (Beer 1994) and ‘World Syntegration’, which refers to groups of citizens from around the world discussing solutions to common problems.

Towards World Syntegration

Stafford Beer’s vision of a World Syntegration process provides the raison d’être of the current proposal. This was Beer’s vision for the worldwide institution of Team Syntegrity processes as a new system of world governance for the good of humanity:

There are people all over the world, sovereign individuals, who have ideas and purposes that they wish to share with others. They do not see themselves as bound by hierarchy (even to their own nation states) or committed to the processes (even those called democratic) that demand the establishment of political parties, dedicated movements, delegations – or indeed high-profile leadership. These people are the material of infosets: neighbourhood infosets of thirty local friends, global infosets of thirty world citizens.

This worldwide syntegration does not of course exist. It is a vision. But although visions may be inspirational, they do nothing much to alleviate suffering until inspiration is embodied in a plan of action. And if mounting human misery is the product of a triage machine as I have argued, and if the triage machine is endemic to the ruling world ideology so that it cannot be dismantled, then the action plan must circumvent triage altogether. The aim is ambitious: to start a process that invokes the redundancy of potential command as the methodology for a new system of world governance.

A group of thirty like-minded friends, sharing a purpose but living well apart, may share their intentions and formulate their actions by electronic means via the technosphere. I call thirty such friends a global infoset. (Beer 1992: 16)

World Syntegration 2.0

Beer laid out the vision for World Syntegration in 1992. Now, in 2017, twenty-five years later, technology can be used to enable a truly global Syntegration process. I believe that Virtual Reality and translation technologies make it possible for 30 people speaking many different languages to meet virtually in order to develop solutions to common problems.

I include this justification in order to explain why this current project resonates with my core values. However, it also serves to delineate the ‘jobs to be done’ which the technology in this project needs to offer:

- A virtual plenary for 30 people

- Virtual roundtable meetings for 5 people, who speak in 5 different languages.

Strategy

My personal reasons for interest in the technology are explained above. However, the exact definition of how the technology will fit together is intentionally left open, as it is to be developed together with collaborators as the project progresses. Indeed, part of the appeal of the proposal is that the technology could be used in many different ways.

I have decided to focus on investigating and testing the feasibility of creating a product that is essentially a Virtual Reality ‘Head Mounted Display’ (henceforth HMD) ‘App’, which uses ‘Speech to Speech’ (henceforth S2S) translation software to enable people to chat remotely ‘face to face’.

Funding Strategy

My strategy for materialising an MVP is to develop the central concept of the technology in order to attract collaborators, develop a project and obtain finance, both from established funding sources in Brazil, such as FAPERJ and FINEP, and from external sources, such as US universities like MIT or Stanford. [Comment: all theoretical concepts need to be defined.] Once an MVP has been developed, I will seek Venture Capital funding to scale the product for global use.

Public Resources Strategy

The funding strategy is to initially assemble a team of experts, including a programmer, VR expert and innovation manager, and as a team write a proposal for funding from public resources such as FINEP[1] and FAPERJ[2] in Brazil, as well as seeking international funding sources, such as MIT’s Global Innovation Fund.[3]

A partnership agreement is currently in place with Albino Ribeiro Neto, a fellow FGV Innovation student and FGV faculty member who has published on Virtual Reality in scientific journals, to pursue grants for developing a ‘Minimum Viable Product’.

The first step towards submitting grant proposals is to delineate which aspects of the MVP we seek to develop. Initial funding would be sought to buy the relevant equipment, which includes:

- Virtual Reality Head Mounted Display (HMD) Device + Developer Kit

- Motion Capture Sensor Technology

- PC with a sufficiently powerful graphics card

In addition, resources are sought to pay and train Brazilian computer programmers to write the code for the application. At this stage we still need to determine exactly how many programmers are required. Given the ambitious scope of this project, a large team of programmers could be needed to develop the following capabilities:

- Facial expression capture and subsequent transfer onto a VR Avatar

- Postural motion capture and subsequent transfer onto a VR Avatar

- Creation of a ‘Collaborative Virtual Space’

- Speech to Speech translation capability

It is strategically important to define the focus areas of the MVP, bounded by what is technically possible, in order to make a coherent proposal. By the end of this initial phase, we aim to have established a start-up company ready for potential Venture Capital support.

Venture Capital Strategy [Comment: the concept of venture capital needs to be introduced.]

Once an MVP has been developed, the prototype will be presented to venture capitalists who support start-ups in their initial phase. It would be valuable to seek incubation in a programming-oriented environment in order to have access to programmers.

A potential supporter has been identified in FGV’s Jose Arnaldo Deutscher, who has voiced interest in the endeavour should we be able to build a prototype.

Potential venture capitalists investing in Virtual Reality have also been identified, such as the ‘Virtual Reality Venture Capital Alliance’,[4] which has $17 billion of deployable funds available. I have also identified a list, compiled by Robert Scoble, of 197 investors seeking to invest in Virtual Reality start-ups.[5] Websites offering further lists of potential VR venture capitalists, listed in the annex, can also be used.[6]

In order to attract Venture Capital, it is important that the product is ‘game-changing’.

Justification for developing the project

Entrepreneurialism and Ideation de-linked from place [Comment: it is important to state the methodology first, that is, the steps. The steps are clear from the section topics, but I believe it is important to set them out explicitly: 1, 2, 3, 4.]

From an entrepreneurial perspective, the project offers a paradigm change, in that it would allow independent groups of entrepreneurs to form without the barriers of language and place. If we consider the talents and abilities of humanity as a whole, we could form far more synergistic teams than is currently possible, when people need to speak the same language or physically share the same space. This is especially true for creative processes, in which teams need to conduct ideation together. With the product I have in mind, truly immersive VR experiences would allow teams to carry out ideation processes together without sharing the same place or language.

The benefits of such a technology can also be seen from a cost-cutting perspective, as it would give access to global talent: a Chinese engineer who speaks English commands one price, while the same engineer without English costs less. The same is true across the rest of the global talent pool. With such technology, the most cost-efficient computer programmer, as well as the most cost-efficient designer, could be hired regardless of the language they speak.

This model of employment fits current trends in globalisation and employment. Traditional 20th-century forms of employment have been eroded by outsourcing and automation, and it has been recognised that the 21st-century worker needs to be entrepreneurial, highly networked and to have diverse revenue streams simply to survive in the current climate. A mechanism that allows groups of people to collaborate and ‘meet’ each other virtually would greatly multiply the possibilities for synergies between groups.

Technological Benefits of immersive VR

The benefit of using virtual reality for meetings is that it is immersive and gives users the opportunity to see each other’s body language and facial expressions. Gigante captures this quality in the following definition:

VR is an immersive, multisensory experience, creating the illusion of participating in a synthetic environment rather than being an external observer of such an environment. (Gigante 1993)

Video-conferencing technology such as Skype or Cisco videoconferencing[7] is currently used for multi-location group meetings; however, these media use flat-screen video. The key difference is the level of immersion that virtual reality offers over traditional video-conferencing.

Traditional videoconferencing and eLearning systems like Skype and Vidyo lack naturalness and are not very immersive. They require users to adopt un-natural mechanisms through keyboard and mouse, resulting in interruptions to the communication flow and thought process. (Guntha 2016)

A disruptive innovation

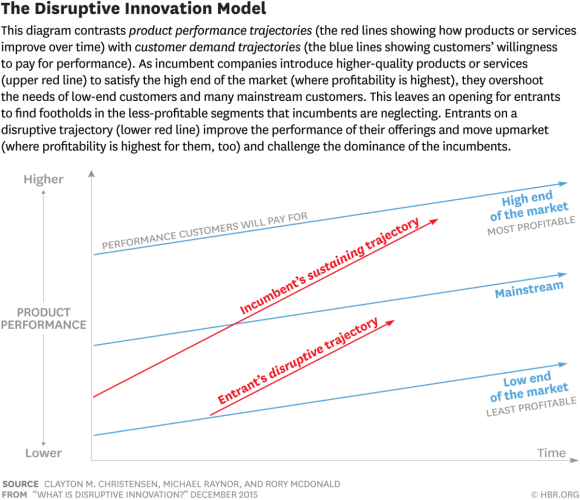

The proposal I am outlining is potentially ‘game-changing’: it is essentially a disruptive innovation, removing the need for face to face meetings to take place in the same physical location. Should this technology mature, it would disrupt the entire business travel industry. I call it disruptive following Christensen’s discussion of disruptive innovation (Christensen 2015). For the business travel industry, the ability to hold an ‘almost as good’ meeting without travelling anywhere is potentially disruptive. It is also disruptive in the sense that the technology is not aimed at current business travellers, but at potential global entrepreneurs who would like to hold international meetings in order to build trust-based personal business networks and ventures, but who cannot afford expensive business travel or lack a language in common with their counterparts.

It is recognised that a virtual reality meeting with speech to speech translation will be inferior, even vastly inferior, to a face to face meeting. However, this is precisely what allows it to appeal to a different sort of client: the client excluded from the current business travel market. In this way it represents a ‘low-end foothold’. Christensen explains that incumbents leave the door open to disruptors offering a ‘good enough’ product to low-end customers who fall outside the incumbents’ core customer base. In this project, the costs are minimal compared with airline travel and hotel logistics.

Another factor is the speed at which VR and S2S technologies are developing, which Christensen sees as favourable to a disruptor: “We’ve come to realize that the steepness of any disruptive trajectory is a function of how quickly the enabling technology improves.” (Christensen 2015).

This project also fits Kim and Mauborgne’s (2014) ‘Blue Ocean Strategy’ [Comment: this needs to be referenced], in that it seeks to establish a completely new customer market: it will enable a vast number of people to hold meetings with each other, whether for entrepreneurial, leisure or political reasons. Christensen makes a related point, namely that customers are not necessarily aware that they need a disruptive product.

VRS2S Moonshot Idea

Google X defines itself as:

“a moonshot factory. Our mission is to invent and launch “moonshot” technologies that we hope could someday make the world a radically better place.”[8] (Google 2017)

The VRS2S project aims to follow Google X’s logic: to create something useful for humanity that radically addresses the problem of a world divided into peoples who speak different languages and who are prevented from meeting each other by travel limitations, using the breakthrough technologies of VR and S2S translation to achieve it.

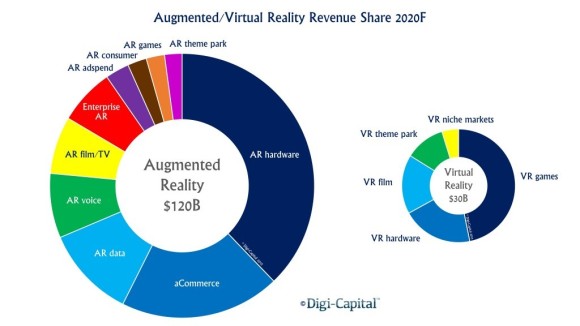

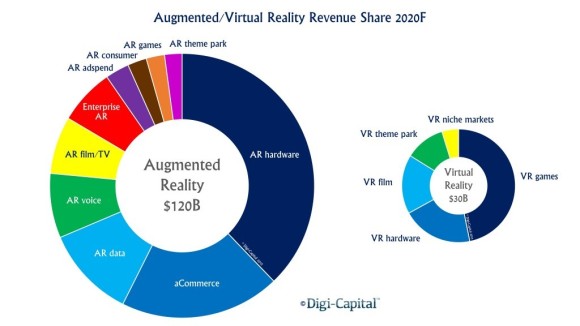

Justification: VR Market Size and Growth, trends and tendencies

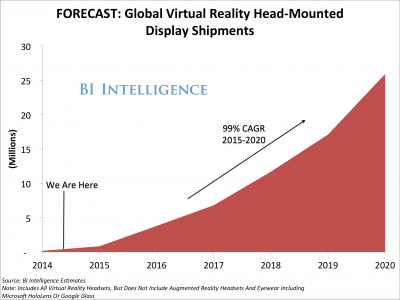

VR industry analysts Digi-Capital forecast that the combined augmented and virtual reality market could reach $150 billion by 2020: $120 billion for augmented reality (AR) and $30 billion for VR (Digi-Capital 2015).[10] Of this $30 billion, around 20% is expected to go to VR niche markets. In 2016, revenue from VR was $1.8 billion, with a total of 6.3 million devices shipped.

By 2030, Ray Kurzweil predicts that communication technology will be so advanced that “two people could be hundreds of miles apart but feel like they are in the same room and even be able to touch one another.” (State of the Future 2017)[12]

From this brief discussion, we can see that VR is a market with very healthy growth indicators.

Limitations of language

The following table shows the number of people connected to the internet. I include it as a reminder that, although the internet connects us to millions of people, it is still segmented by language. People from different regions do not interact as much as they could, because they lack a shared language. The table, based on Internet Live Stats data for 2013, shows that almost half of all internet users are in Asia, more than in Europe and the Americas combined. A technology that connects these billions of people across language barriers is aiming at a large market.

VR Market Dynamics Analysis

An analysis of the VR market reveals some complications, which I came across while researching this project and which we must be aware of. VR via HMD is currently a competitive market in terms of technology. The market leaders are HTC’s Vive platform, the Facebook-backed Oculus Rift, Sony’s PlayStation VR and Samsung’s mobile phone platform, the Samsung Gear VR.

However, HMDs are still at an early stage and are relatively expensive, and there are few quality applications and games because of the cost/benefit ratio of developing for each platform. The VR market faces a ‘Catch-22’: the installed base of each device is still too small to attract developers, because too few headsets are in the hands of consumers, while the shortage of quality applications gives consumers little reason to buy headsets. This technology is still very much in an ‘early adopter’ phase. Eric Romo, CEO of AltspaceVR, explains that:

…current headsets are pretty good, but “they all have tragic flaws…. Desktop VR is too complicated, and console VR monopolizes your living room, while mobile VR isn’t full-featured enough” (Robertson 2017) [14]

However, sales of VR HMDs are expected to grow rapidly over the next few years, according to Business Insider (see image below). The AMD VR Advisory Council also expects the VR hardware market to reach $2.8 billion by 2020.[15]

VR HMD Device comparison

A challenge for current high-end HMDs is that they need a wired connection to a PC to handle the amount of data required, because Wi-Fi transmission cannot transfer enough data:

The problem lies in the enormous amount of data that is required to drive the VR display. And as VR content increasingly becomes high-definition, the problem only gets worse. To achieve smooth action with low latency, any wireless system for virtual reality must have transfer rates exceeding 4Gbps, and preferably exceeding 5.2Gbps. With the current 802.11ac wifi standard capable of a maximum 1.3 Gbps, you can see the problem. (Levski 2017)
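To make the quoted figures concrete, the sketch below estimates the uncompressed video bandwidth of a tethered headset and compares it with the 1.3 Gbps 802.11ac maximum cited by Levski. The display parameters are assumptions for illustration only (a 2160 × 1200 combined panel refreshed at 90 Hz with 24-bit colour, as on the first-generation HTC Vive), and the calculation ignores compression and protocol overhead.

```python
# Rough, uncompressed video bandwidth for a tethered VR headset.
# The display parameters are assumptions for illustration only
# (first-generation HTC Vive panel: 2160 x 1200 combined, 90 Hz, 24-bit colour).

WIDTH_PX = 2160          # combined width of both eye panels
HEIGHT_PX = 1200         # panel height
REFRESH_HZ = 90          # frames per second
BITS_PER_PIXEL = 24      # 8 bits each for red, green and blue

WIFI_80211AC_GBPS = 1.3  # 802.11ac maximum quoted by Levski (2017)


def raw_video_gbps(width: int, height: int, hz: int, bpp: int) -> float:
    """Uncompressed video data rate in gigabits per second."""
    return width * height * hz * bpp / 1e9


if __name__ == "__main__":
    needed = raw_video_gbps(WIDTH_PX, HEIGHT_PX, REFRESH_HZ, BITS_PER_PIXEL)
    print(f"Uncompressed stream: {needed:.2f} Gbps")
    print(f"Ratio to 802.11ac maximum: {needed / WIFI_80211AC_GBPS:.1f}x")
```

Under these assumptions the raw stream is about 5.6 Gbps, of the same order as the 4 to 5.2 Gbps range in the quotation and more than four times the 802.11ac maximum. Compression can lower the figure, but encoding and decoding add delay, which works against the low latency VR requires.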

However, hardware developers are working on ‘standalone’ VR devices and on solutions to these processing-power requirements. Elsewhere, in April 2017 the first ‘hologram telephone call’ took place over a 5G network, which allows connection speeds up to 100 times faster than existing networks. 5G networks are expected to be implemented by 2020 (Trusted Reviews 2017).[20]

Motion Capture Technology considerations

The Oculus Rift can be combined with a Microsoft Kinect system for body motion capture, while the HTC Vive includes motion capture technology as part of its package. Within the scope of the current project, the HTC Vive is the best equipped for motion capture. The Samsung Gear VR platform is the most appealing in terms of potential market, because it is more accessible, being a cheap accessory to an established mobile phone. However, the limited processing power of the mobile phone option excludes it from our analysis.

For an MVP, the most basic value proposition must be demonstrated as a prototype. Following proof of concept, new hardware options can be chosen as they appear on the market. HMDs are still changing very quickly, and the trend is for their computational capacity to increase and their prices to drop.

Towards an MVP

The working title for this project is VR+S2S (Virtual Reality plus Speech to Speech), and I seek to build an application for an HMD device. The product is essentially a ‘virtual collaborative environment’, ideally for up to five people in five different locations, speaking five different languages, each represented by an avatar of themselves.

The overall goal of this project is to create a platform for collaboration in which the barriers that place and language pose to human meetings are reduced using VR and S2S translation technologies.

Such an ambitious project raises all sorts of questions. Some are essential but beyond the scope of the MVP, and I list them briefly here. For example, the project will require a directory of users, somewhat in the mould of LinkedIn or another social-media platform, which automatically translates all search terms and all search results. This part needs to be developed to address the question of how users can find each other.
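The directory idea can be illustrated with a small sketch. It assumes each user profile stores keywords in its owner's language and that some machine translation function is available; here `translate` is a hypothetical stand-in backed by a toy phrase table, not a real translation API. The search translates the query into each profile's language before matching, so a Portuguese speaker searching for ‘engenheiro’ can also find an English-language profile listing ‘engineer’.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical phrase table standing in for a real machine translation service.
# Keys are (source language, target language, term).
PHRASES = {
    ("pt", "en", "engenheiro"): "engineer",
    ("pt", "zh", "engenheiro"): "工程师",
}


def translate(term: str, source: str, target: str) -> str:
    """Hypothetical translation stub; returns the term unchanged if no entry exists."""
    if source == target:
        return term
    return PHRASES.get((source, target, term), term)


@dataclass
class Profile:
    name: str
    language: str                                   # language the profile is written in
    keywords: set[str] = field(default_factory=set)


def search(query: str, query_lang: str, directory: list[Profile]) -> list[str]:
    """Translate the query into each profile's language before matching keywords."""
    hits = []
    for profile in directory:
        local_term = translate(query, query_lang, profile.language)
        if local_term in profile.keywords:
            hits.append(profile.name)
    return hits


if __name__ == "__main__":
    directory = [
        Profile("Ana", "pt", {"engenheiro"}),
        Profile("Bob", "en", {"engineer", "designer"}),
        Profile("Li", "zh", {"设计师"}),
    ]
    print(search("engenheiro", "pt", directory))  # ['Ana', 'Bob']
```

A fuller version would also translate the matching profiles back into the searcher's language, covering the ‘search results’ half of the requirement, and would rely on a real translation service rather than a lookup table.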

The strategy I have chosen is to focus on the essential core idea of the project, following Eric Ries’ ‘The Lean Startup’ (2011), and to work as fast as possible to test the core assumption that it is feasible to create a VR collaborative space with S2S capabilities. Answering questions of feasibility led me to research the state of the art in collaborative meeting platforms and the possibilities for developing a VR S2S ‘Minimum Viable Product’. This research has been essential for determining what sort of product can actually be offered.

Jobs to be done for the MVP

The jobs to be done by this technology are the following:

1. Create a shared ‘virtual collaborative environment’

A shared virtual environment in which the five participants can see each other. Initially this is to be done as simply as possible: a simple room with a table and five chairs. This step requires building a simple virtual environment that is accessible via an internet connection. To enter this virtual space, participants may use an HMD device such as an Oculus Rift.

Essentially this is a simple virtual reality programming task.
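The first job is small enough to sketch. The model below assumes the room is a fixed set of chairs around one table and that participants are seated in the first free chair as they connect; the class and method names are hypothetical and tied to no particular engine. In a real system this state would be held on a server and synchronised to each headset over the internet connection.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class MeetingRoom:
    """Hypothetical state of the MVP room: one table with a fixed number of chairs."""

    capacity: int = 5
    seats: list[str | None] = field(default_factory=list)  # None marks an empty chair

    def __post_init__(self) -> None:
        if not self.seats:
            self.seats = [None] * self.capacity

    def join(self, participant_id: str) -> int:
        """Seat a participant in the first free chair and return the chair index."""
        if participant_id in self.seats:
            raise ValueError(f"{participant_id} is already seated")
        for index, occupant in enumerate(self.seats):
            if occupant is None:
                self.seats[index] = participant_id
                return index
        raise RuntimeError("the room is full")

    def leave(self, participant_id: str) -> None:
        """Free the chair held by a participant."""
        self.seats[self.seats.index(participant_id)] = None

    def occupants(self) -> list[str]:
        """Participants currently seated, in chair order."""
        return [p for p in self.seats if p is not None]


if __name__ == "__main__":
    room = MeetingRoom()
    for name in ["ana", "bob", "chen"]:
        room.join(name)
    room.leave("bob")
    print(room.join("dara"), room.occupants())
```

Keeping seat assignment explicit matters later on: each chair's fixed position determines the angle from which the other participants see its occupant.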

2. Virtual avatar representation

The aim is to hold a virtual face to face meeting, which requires each user’s appearance, body position and facial expression to be mapped in real time onto their avatar in the meeting room.

There are essentially two jobs to be done in this section, firstly to accurately display facial expression, and secondly to accurately display postural information in the virtual environment.

Current technology limits the realisation of this step of the project because of the graphics rendering requirements. It is not currently feasible for facial recognition technology to provide a realistic rendering of an individual’s face and movement in a 3D virtual reality setting via an internet connection, especially for five people.

Postural recognition can be achieved using a Microsoft Kinect device, or ASUS’ Xtion Pro Live device, or HTC Vive’s Motion Controllers.

Another question arises here: how can a facial mapping algorithm run if the user is wearing an HMD? This question has been answered by a team of researchers at the University of Southern California, led by Hao Li, who developed an algorithm that determines facial expression underneath an HMD headset.[21] In this experiment, a modified HMD with a camera attached, pointing at the user’s face, is used to capture the user’s expression.

A question that I seek to answer is what level of realism is necessary for a face to face meeting. I feel that a rendering of basic body language and head position, as well as facial expression, is important. However, the users’ avatars do not necessarily need cinematic realism. First, current VR technology cannot render a user’s face and posture photorealistically into a 3D environment in real time, yet a simplified avatar that maps basic expression and posture may be sufficient, especially for an MVP. Second, there is the ‘uncanny valley effect’,[22] which refers to the observation that people often feel more uncomfortable with avatars that are almost, but not quite, lifelike than with avatars that are intentionally stylised. This suggests that if our technology cannot offer very realistic rendering, this is not necessarily of paramount importance.
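To make the idea of a simplified avatar concrete, the two jobs above can be thought of as two streams of numbers sent for each user on every update: joint positions for posture and a handful of weights for facial expression. The message format below is hypothetical; it assumes 25 tracked joints (the size of the Kinect v2 skeleton), an illustrative set of seven expression channels and an assumed rate of 30 updates per second.

```python
from __future__ import annotations

import struct
from dataclasses import dataclass

NUM_JOINTS = 25  # assumption: size of the Kinect v2 skeleton
# Illustrative facial expression channels, not a standard set.
EXPRESSIONS = ("jaw_open", "smile_left", "smile_right",
               "brow_up_left", "brow_up_right", "blink_left", "blink_right")
# Little-endian: uint32 user id, uint64 timestamp, joint xyz floats, expression floats.
FRAME_FORMAT = f"<IQ{3 * NUM_JOINTS}f{len(EXPRESSIONS)}f"


@dataclass
class AvatarFrame:
    """Hypothetical per-user update carrying posture and facial expression."""

    user_id: int
    timestamp_ms: int
    joints: list[tuple[float, float, float]]  # joint positions in metres
    expression: list[float]                   # expression weights in [0, 1]

    def pack(self) -> bytes:
        """Serialise the frame into a compact binary message."""
        if len(self.joints) != NUM_JOINTS or len(self.expression) != len(EXPRESSIONS):
            raise ValueError("unexpected frame shape")
        coords = [c for joint in self.joints for c in joint]
        return struct.pack(FRAME_FORMAT, self.user_id, self.timestamp_ms,
                           *coords, *self.expression)


if __name__ == "__main__":
    frame = AvatarFrame(1, 0, [(0.0, 0.0, 0.0)] * NUM_JOINTS, [0.0] * len(EXPRESSIONS))
    size = len(frame.pack())
    updates_per_second = 30  # assumed update rate
    kbps = size * 8 * updates_per_second / 1000
    print(f"{size} bytes per frame, {kbps:.1f} kbit/s per user")
```

Each frame is 340 bytes, about 82 kbit/s per user at 30 updates per second, so a participant receiving the other four users’ streams needs roughly 330 kbit/s. Under these assumptions the difficulty lies in capturing and rendering the data, not in transmitting it.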

3. Simultaneously translate more than one language at the same time

While virtual reality meeting software exists, none of the products I observed offered simultaneous translation. The MVP therefore needs to deliver a translation service with as little delay as possible.
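The translation job grows quickly with the number of languages. With five participants each speaking a different language, every utterance must reach four listeners in four target languages, so the system has to support 5 × 4 = 20 directed language pairs. The sketch below shows the common cascade for speech to speech translation (speech recognition, then machine translation, then speech synthesis) fanned out to each listener. The three stage functions are hypothetical placeholders, not calls to any real service; a working system would plug in actual recognition, translation and synthesis engines.

```python
from __future__ import annotations

from itertools import permutations


# Hypothetical stand-ins for real recognition, translation and synthesis engines.
def recognise(audio: bytes, lang: str) -> str:
    return audio.decode("utf-8")            # pretend the audio is already text


def translate(text: str, source: str, target: str) -> str:
    return f"[{source}->{target}] {text}"   # tag the text instead of translating it


def synthesise(text: str, lang: str) -> bytes:
    return text.encode("utf-8")             # pretend the text is audio


def route_utterance(audio: bytes, speaker: str,
                    languages: dict[str, str]) -> dict[str, bytes]:
    """Deliver one speaker's utterance to every other participant in their language."""
    source = languages[speaker]
    text = recognise(audio, source)         # recognise once per utterance
    outputs = {}
    for listener, target in languages.items():
        if listener == speaker:
            continue
        translated = text if target == source else translate(text, source, target)
        outputs[listener] = synthesise(translated, target)
    return outputs


if __name__ == "__main__":
    participants = {"ana": "pt", "bob": "en", "chen": "zh", "dara": "ar", "emil": "de"}
    pairs = list(permutations(set(participants.values()), 2))
    print(len(pairs), "directed language pairs")  # 20
    for who, audio in route_utterance(b"bom dia", "ana", participants).items():
        print(who, audio.decode("utf-8"))
```

Because each stage adds delay, the total latency a listener experiences is the sum of all three; recognising once per utterance and reusing translations for listeners who share a language are simple ways to keep it down.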

Business intelligence research benchmarking

I have conducted extensive research into the state of the art of virtual reality technology and machine translation technology, in order to determine the technological possibilities towards the realisation of this project.

The business case for investing in a virtual face to face meeting technology with simultaneous speech translation is as follows. The proposed solution responds to growing trends towards outsourcing and offshore work. While offshore outsourcing makes processes cheaper, it has also made communication and collaboration more difficult. There is demand for technologies that enable cross-platform collaboration, and face to face meetings are acknowledged to be very important for team cohesion.

“Successful collaboration and team performance depends, to some extent, on the socialisation of the dispersed team members.” (Oshri 2007: 26)

Such technology could offer many other opportunities: it is suitable for international boardroom meetings, working-group sessions of international organisations and multi-location group project work.

Feasibility probing of virtual reality for collaborative meetings

The idea of immersive virtual reality meetings with simultaneous translation is fascinating; however, it belongs to the realm of ‘product design’. Ideally, the next step would be a conversation with a ‘product engineer’ to fit the design to the limits of current technology.

I have researched what current virtual reality technology makes possible for this project, and there is a series of questions I have needed to answer in order to ground this product in reality.

Essentially, I am talking about a product that uses a Head Mounted Display [HMD], and earphones for sound transmission. The HMD allows the user to enter into and experience virtual reality, seeing other people there, and being able to interact with them. The translation aspect is relatively simple as this can be done through an earpiece and microphone. The design of the 3-D environment itself is also relatively simple.

Also essential is a camera that can capture my movement and facial expression. Given current technological limits, the camera on my computer cannot capture both my movement and my facial expression. There are many problems related to this, such as the following:

To represent my body position accurately in VR, five cameras would be needed, placed at five positions around me. If I were sitting in a virtual roundtable meeting, the sides of my face would then be displayed accurately, since people sitting next to me require a different view from those sitting opposite me.
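The geometry behind this point can be made explicit. If five seats are spaced evenly around a round table, each participant sees a given speaker from a different direction, and the capture rig around the speaker must supply a view for each of those directions. The sketch below assumes evenly spaced seats, a speaker facing the table centre and a hypothetical three-camera rig mounted at −45°, 0° and +45° (all simplifications for illustration); it computes each observer's viewing angle and picks the nearest camera.

```python
from __future__ import annotations

import math


def seat_position(index: int, n_seats: int, radius: float = 1.0) -> tuple[float, float]:
    """(x, y) of a seat, with seats spaced evenly around a round table."""
    angle = 2 * math.pi * index / n_seats
    return radius * math.cos(angle), radius * math.sin(angle)


def viewing_angles(speaker: int, n_seats: int) -> dict[int, float]:
    """Angle in degrees from which each other seat sees the speaker.

    Measured from the speaker's line of sight towards the table centre;
    positive values lie to the speaker's left, negative to the right.
    """
    sx, sy = seat_position(speaker, n_seats)
    fx, fy = -sx, -sy                        # the speaker faces the centre
    angles = {}
    for other in range(n_seats):
        if other == speaker:
            continue
        ox, oy = seat_position(other, n_seats)
        vx, vy = ox - sx, oy - sy            # direction from speaker to observer
        cross = fx * vy - fy * vx
        dot = fx * vx + fy * vy
        angles[other] = math.degrees(math.atan2(cross, dot))
    return angles


def nearest_camera(angle: float, camera_angles: list[float]) -> float:
    """Mounting angle of the rig camera closest to a given viewing angle."""
    return min(camera_angles, key=lambda cam: abs(cam - angle))


if __name__ == "__main__":
    rig = [-45.0, 0.0, 45.0]                 # hypothetical three-camera rig
    for seat, angle in viewing_angles(0, 5).items():
        print(f"seat {seat}: {angle:+.0f} deg, camera at {nearest_camera(angle, rig):+.0f} deg")
```

Under these assumptions the two neighbouring seats see the speaker about 54° off-axis and the two seats across the table about 18° off-axis, so neighbours and opposite participants do need different views, and a single frontal camera cannot serve them all.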

Microsoft Kinect Motion Capture

For posture, the issue can be addressed with the Microsoft Kinect movement tracker designed for gaming platforms, although it provides a ‘flat’, single-viewpoint image capture, which is adequate for a teacher at the front of a class but not for a roundtable meeting.

Skeletal tracking data can be rendered live onto an avatar in a virtual environment using depth imaging sensors such as the Microsoft Kinect or ASUS’ Xtion Pro Live. Microsoft’s Kinect device is currently used in videogames and can track skeletal movements within its field of view.
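Rendering a tracked skeleton onto an avatar usually involves a retargeting step, in which each joint reported by the sensor is mapped to the corresponding bone of the avatar rig. The sketch below assumes Kinect v2 joint names (a subset of its 25 joint types, such as `SpineBase` and `HandLeft`) and hypothetical avatar bone names; it copies positions relative to the hips, scales them to the avatar's size and skips joints the avatar does not have. Real retargeting would also transfer joint rotations and handle differences in limb proportions.

```python
from __future__ import annotations

# Assumed mapping from Kinect v2 joint names to hypothetical avatar bone names.
# Only a subset of the 25 Kinect v2 joints is listed.
KINECT_TO_AVATAR = {
    "SpineBase": "hips",
    "SpineMid": "spine",
    "Neck": "neck",
    "Head": "head",
    "ShoulderLeft": "upper_arm_l",
    "ElbowLeft": "forearm_l",
    "HandLeft": "hand_l",
    "ShoulderRight": "upper_arm_r",
    "ElbowRight": "forearm_r",
    "HandRight": "hand_r",
}


def retarget(kinect_joints: dict[str, tuple[float, float, float]],
             scale: float = 1.0) -> dict[str, tuple[float, ...]]:
    """Rename tracked joints for the avatar and express them relative to the hips.

    `scale` compensates for an avatar larger or smaller than the user;
    joints without an entry in the mapping are ignored.
    """
    root = kinect_joints["SpineBase"]
    pose = {}
    for joint, position in kinect_joints.items():
        bone = KINECT_TO_AVATAR.get(joint)
        if bone is None:
            continue
        pose[bone] = tuple(scale * (p - r) for p, r in zip(position, root))
    return pose


if __name__ == "__main__":
    frame = {"SpineBase": (0.0, 0.9, 2.0), "Head": (0.0, 1.6, 2.1), "ThumbLeft": (0.3, 1.0, 2.0)}
    print(retarget(frame, scale=1.1))
```

Expressing positions relative to the hips means the avatar can be placed in any chair of the virtual room regardless of where the user stands in front of the sensor.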

Kinect technology has been used to demonstrate that one person can assist another through VR by mapping their movements into a shared view. For example, Tecchia (2012) conducted an experiment demonstrating collaboration through hand gestures, using a Kinect camera to map hand position.

Kinect technology has also been used by researchers in India to project real-time 3D images of people into the same virtual space, with the aim of making eLearning more realistic.[23] The Kinect device captures the user’s image and displays it on screen in a shared virtual space with another Kinect-connected user in a separate physical space (Guntha 2017). However, according to personal communication in 2017 with Ramesh Guntha, the leader of this project, this technology works best for classroom environments. Capturing a roundtable meeting is much more complicated: a setup with at least three cameras is needed to capture the occluded angles of each participant (Guntha 2016), although such a setup gives a much more immersive experience than the single-camera version. For a roundtable meeting, a workaround could therefore be to use multiple cameras to capture the different sides of each individual, providing enough information to reconstruct the user’s position in virtual reality.

‘Real-time remote collaboration’ between teams in different countries has also been achieved using Virtual Reality and Kinect for the manufacturing industry. Oyekan (2017) describes the creation of an infrastructure for remote collaboration for automobile assembly using depth imaging sensors (Kinect) and a synchronous data transfer protocol. In an experiment, a team of engineers in the UK and a team in India were able to collaborate on the same physical task of fixing a roof rack to a car. In this experiment, the Kinect device was used to render the user’s correct posture into a 3D environment as an avatar.

IKinema’s Project Orion

The most interesting motion capture example comes from the specialist company IKinema, which developed a low-cost motion capture system using the HTC Vive platform. In this demonstration, three Vive Trackers are combined with the headset and the standard hand controllers, and the user’s movements are transferred very accurately to a virtual environment (James 2017).[24] A demonstration of the project is available on YouTube.[25] The level of realism achieved by IKinema is a proof of concept for cost-efficient motion capture. In addition, the tracking technology used by the HTC Vive has been made available to third-party developers under a royalty-free license, which facilitates development on this platform.

Using a VR headset such as the HTC Vive, motion controllers and just three new Vive Trackers, Orion can be used as a high-quality, flexible and accessible motion-capture system. The same approach can be harnessed to provide virtual reality body tracking, meaning a VR user can have their movements captured and reproduced while they are within a given experience, taking immersion to new levels. (IKinema Orion website)[26]
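Orion's own solver is proprietary and is not described in the sources cited here. A standard technique for inferring an elbow or knee from sparse trackers, however, is analytic two-bone inverse kinematics: given the lengths of the upper and lower limb segments and the measured distance from the shoulder (or hip) to a tracker at the wrist (or ankle), the law of cosines, cos θ = (u² + l² − r²) / (2ul), gives the bend at the middle joint. The sketch below illustrates that generic technique only; it is not IKinema's implementation, and the segment lengths used are arbitrary.

```python
import math


def two_bone_ik_bend(upper: float, lower: float, reach: float) -> float:
    """Interior angle (degrees) at the middle joint, e.g. an elbow or knee, so that
    a two-segment limb spans `reach` from its root joint to the tracked end point.

    `reach` is clamped to the range the limb can physically span, so a tracker
    that drifts slightly out of range gives a straight (or fully folded) limb.
    """
    reach = max(abs(upper - lower), min(upper + lower, reach))
    cos_mid = (upper ** 2 + lower ** 2 - reach ** 2) / (2 * upper * lower)
    cos_mid = max(-1.0, min(1.0, cos_mid))  # guard against rounding before acos
    return math.degrees(math.acos(cos_mid))


# Arbitrary example: a 0.30 m upper arm, 0.25 m forearm, wrist tracker 0.40 m from the shoulder.
print(two_bone_ik_bend(0.30, 0.25, 0.40))
```

The sketch yields only the amount of bend; a complete solver also needs a hint for the direction in which the joint points (for example, which way the knee faces), which is where commercial systems add their own refinements.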

IKinema Orion is sold as a licensable technology (for $500 per year), so it could be easily incorporated into this project.

Facial Expression

Facial expression is important for making the meeting as realistic as possible. However, the faces of users wearing bulky HMDs are largely hidden, so how can their facial expressions be represented accurately?

This issue is partly addressed by a team of researchers led by Hao Li at the University of Southern California, who have developed a deep learning algorithm that calculates the expression an individual is making from the muscular movements of the lower face and their correlation to the part of the face obscured by the HMD (Olszewski et al. 2016). The team’s innovative project recognises the following:

While readily available body motion capture and hand tracking technologies allow users to navigate and interact in a virtual environment, there is no practical solution for accurate facial performance-sensing through a VR HMD, as more than 60% of a typical face is occluded by the device. Li and coworkers have recently demonstrated the first prototype VR HMD [Li et al. 2015] with integrated RGB-D and strain sensors, that can capture facial expressions with comparable quality to cutting edge optical real-time facial animation systems. However, it requires a tedious calibration process for each session, and the visual quality of lip motions during speech is insufficient for virtual face-to-face communication.

Our objective is to enable natural face-to-face conversations in an immersive setting by animating highly accurate lip and eye motions for a digital avatar controlled by a user wearing a VR HMD (Olszewski et al. 2016:220)

Although the team’s work is still a research project, their algorithm could be very useful for capturing the facial expressions of HMD wearers.
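Once an algorithm of this kind has estimated the user's expression, the avatar's face still has to be deformed accordingly. A common way to do this in real-time animation is a linear blendshape rig: each expression (jaw open, smile, and so on) is stored as a displaced copy of a neutral mesh, and every vertex is computed as v = v₀ + Σᵢ wᵢ (vᵢ − v₀) from per-expression weights wᵢ. The sketch below shows only that blending step, assuming the weights are already available; the shape names and the two-vertex "mesh" are hypothetical placeholders, and it is not presented as the method used by Olszewski et al.

```python
from typing import Dict, List, Tuple

Vertex = Tuple[float, float, float]


def blend_face(neutral: List[Vertex],
               targets: Dict[str, List[Vertex]],
               weights: Dict[str, float]) -> List[Vertex]:
    """Linear blendshapes: neutral + sum_i w_i * (target_i - neutral).

    Weights are clamped to [0, 1]; shapes missing from `weights` count as 0.
    """
    out = [list(v) for v in neutral]
    for name, target in targets.items():
        w = max(0.0, min(1.0, weights.get(name, 0.0)))
        if w == 0.0:
            continue
        for i, (v0, vt) in enumerate(zip(neutral, target)):
            for k in range(3):
                out[i][k] += w * (vt[k] - v0[k])
    return [tuple(v) for v in out]


# Hypothetical two-vertex mouth: vertex 0 is the chin, vertex 1 a mouth corner.
neutral = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
targets = {
    "jaw_open": [(0.0, -1.0, 0.0), (1.0, 0.0, 0.0)],
    "smile": [(0.0, 0.0, 0.0), (1.0, 0.5, 0.0)],
}
print(blend_face(neutral, targets, {"jaw_open": 0.5, "smile": 1.0}))
```

Because only a short list of weights changes from frame to frame, this representation is also compact to transmit between participants.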

Feasibility challenges that need further research include the computational requirements for rendering live video capture onto a 3D avatar, and whether the amount of data involved is too large for a client/server connection. Fukuda (2006) writes about collaborators on an architectural project meeting virtually and walking together through the site of the proposed building. He explains that this is possible using ‘Cloud VR’ technology, although a PC with a high-specification GPU (Graphics Processing Unit) is necessary.
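Part of the client/server question can be framed as back-of-the-envelope arithmetic, because sending each participant's pose as a skeleton is far lighter than sending raw depth video. The sketch below compares the two under assumptions I have chosen for illustration: a 25-joint skeleton (the number of joints the Kinect v2 tracks), encoded as a position plus a rotation quaternion in 32-bit floats at an assumed 90 updates per second, against uncompressed 512 × 424 16-bit depth frames at 30 frames per second. Compression, protocol overhead and the colour stream are ignored, so the figures are indicative only.

```python
def mbit_per_s(bytes_per_frame: int, frames_per_s: float) -> float:
    """Uncompressed bit rate in megabits per second."""
    return bytes_per_frame * frames_per_s * 8 / 1_000_000


FLOAT32_BYTES = 4

# Option A: skeleton stream, position (x, y, z) + rotation quaternion per joint.
SKELETON_JOINTS = 25
pose_bytes = SKELETON_JOINTS * (3 + 4) * FLOAT32_BYTES   # 700 bytes per frame
pose_rate = mbit_per_s(pose_bytes, 90)                   # assumed 90 updates per second

# Option B: raw 16-bit depth image at 512 x 424 pixels.
depth_bytes = 512 * 424 * 2                              # 434,176 bytes per frame
depth_rate = mbit_per_s(depth_bytes, 30)                 # 30 frames per second

print(f"skeleton: {pose_rate:.2f} Mbit/s, depth: {depth_rate:.1f} Mbit/s")
```

Under these assumptions the skeleton stream needs about 0.5 Mbit/s per participant and the raw depth stream about 104 Mbit/s, roughly 200 times more, which suggests that transmitting posture rather than video is the more realistic option for a client/server MVP.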

Perhaps realism is not the most important factor, in which case the rendering challenges described above are less significant. VR research suggests that ‘realness’ is not necessarily a decisive factor for interaction in a virtual space: according to the ‘Uncanny Valley’ theory, very human-like characters can evoke feelings of unease in a virtual environment (Stein and Ohler 2017). Given how difficult it is to render an accurate representation of an individual in a 3D environment, it is encouraging that an avatar may not need to be as lifelike as possible. One avenue of research is therefore to test whether facial expressions, head position, posture and voice can be accurately rendered onto a cartoon-like avatar, and whether users would find this form of communication workable.

Benchmarking of Virtual Reality and collaborative meeting technology

Mindmaze

A neuroscience and computing company called Mindmaze has developed a mask with sensors, placed under an HMD, that maps the user’s expressions and transfers them to a VR avatar. The product is in the development phase, and the company is developing the technology for use by existing HMD manufacturers, since the sensors could be fitted to existing devices. Figures 8 and 9 below show the Mindmaze sensors on the inside of an HMD device and how the user’s expression can be mapped to an avatar in virtual reality.

Figure 8: Facial expression translation onto VR avatar using sensors on the device.[1]

Figure 9: Mindmaze Sensors HMD[1]

[1] Mindmaze sensors, source: www.mindmaze.com

[1] Image from: www.mindmaze.com

3DiO

I have identified similar virtual reality meeting technology developed at Stanford University’s Center for Integrated Facility Engineering (CIFE). According to a media article,[28] Stanford’s CIFE is developing a ‘virtual meeting place solution’ in the form of a ‘virtual immersive environment’. The article explains that the project, known as 3DiO, is in the early stages of development:

In a world where global teams are increasingly prevalent, the researchers at Stanford CIFE have created a virtual meeting place solution, sure to be a breakthrough in the AEC industry. 3DiO is a virtual immersive environment, drawing from the idea of an Obeya, the Japanese term for a big room or war room. Imagine WebEx meets Second Life and you have 3DiO – a refreshing change for anyone who has sat in an excruciating long, mismanaged, and boring BIM coordination meeting. This technology is still in the early creation stages, but anything that comes out of Stanford CIFE is worth keeping an eye on. (Techlink 2017).[29]

However, the only publicly available information on this project is a 20-minute presentation given at Stanford in 2015.[30] Nevertheless, it demonstrates that cutting-edge institutions are dedicating resources to this application of VR technology.[31]

Doghead Simulations’ rumii

One technology that has made it to market is provided by Doghead Simulations.[32] The company offers a VR solution called “rumii” for collaboration between a company’s team members, supporting Agile planning, sprint development and project management. The product runs as a subscription-based cloud VR service.

In addition, Cisco Systems is a market leader in conventional 2D telepresence and videoconferencing.

Virtual Social Spaces

There are a few social platforms that use the VR medium, such as VRChat,[33] where users can create worlds and interact with other users; VRChat attracted $1.2 million in seed funding in 2016. AltspaceVR[34] is a social space designed to bring VR users together in a virtual space. Bigscreen VR, which started with $3 million in funding from Andreessen Horowitz, offers among other things the possibility to “collaborate in virtual rooms with your co-workers”.[35]

Benchmarking assessment

Looking at what Doghead and Bigscreen offer, we can see that the User Experience is rather limited as the screenshots from their applications show:

Doghead’s rumii:

Bigscreen VR:

It is clear from the images that neither product offers motion tracking or an accurate representation of the user. This suggests that the project I am seeking to develop would be original.

Benchmarking of Speech to Speech (S2S) Translation Technology

Translation technology is advancing quickly, and large tech companies such as Google and Microsoft (Skype) have invested in developing this technology. Google Translate and Skype Translator are leaders in the field.

Skype Translator

Skype Translator is an S2S translation system that combines Automatic Speech Recognition and Machine Translation. However, the technology is still being perfected: “We have some ways to go to achieve fully seamless, real-time spoken translation” (Lewis 2015). In April 2017, Skype began to offer real-time Japanese speech translation.[38]

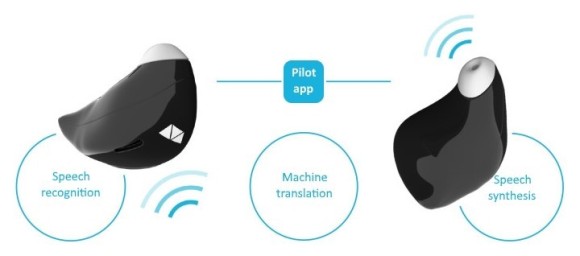

Waverly Labs’ Pilot

Waverly Labs have developed an S2S earpiece kit that works with a mobile phone application and two earpieces; it is scheduled for commercial release in September 2017. The development of this product is a proof of concept for speech-to-speech translation technology.

Speech to Speech Benchmark Analysis

The S2S interface is not new in computing and, according to programmers I have talked to, this capability is not difficult to offer in an application. Open-source frameworks for building S2S systems are available, such as UIMA, Galaxy and the Open Agent Architecture (Aminzadeh and Shen 2009).
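A common way to structure S2S translation is a cascade of automatic speech recognition, machine translation and speech synthesis. The sketch below applies that cascade to the MVP's requirement of translating into more than one language at the same time: each utterance is recognised once, then translated and synthesised once per language present in the meeting, while listeners who share the speaker's language hear the original audio. The component signatures, the `relay_utterance` function and the toy stand-ins are all hypothetical; they do not reproduce the APIs of UIMA, Galaxy, the Open Agent Architecture or any commercial service.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Hypothetical component signatures; each would wrap a real ASR, MT or TTS engine.
Recognize = Callable[[bytes, str], str]       # (audio, language) -> transcript
Translate = Callable[[str, str, str], str]    # (text, source, target) -> text
Synthesize = Callable[[str, str], bytes]      # (text, language) -> audio


@dataclass(frozen=True)
class Participant:
    name: str
    language: str


def relay_utterance(audio: bytes, speaker: Participant, listeners: List[Participant],
                    asr: Recognize, mt: Translate, tts: Synthesize) -> Dict[str, bytes]:
    """Recognise once, then translate and synthesise once per target language;
    listeners sharing the speaker's language receive the original audio."""
    transcript = asr(audio, speaker.language)
    by_language: Dict[str, bytes] = {}
    for lang in {p.language for p in listeners}:
        if lang == speaker.language:
            by_language[lang] = audio
        else:
            by_language[lang] = tts(mt(transcript, speaker.language, lang), lang)
    return {p.name: by_language[p.language] for p in listeners}


# Toy stand-ins so the sketch runs without any real speech engine.
PHRASES = {("hello", "en", "pt"): "olá", ("hello", "en", "ja"): "konnichiwa"}

def toy_asr(audio: bytes, language: str) -> str:
    return audio.decode("utf-8")

def toy_mt(text: str, source: str, target: str) -> str:
    return PHRASES[(text, source, target)]

def toy_tts(text: str, language: str) -> bytes:
    return f"[{language}] {text}".encode("utf-8")


ana = Participant("Ana", "en")
room = [Participant("Bruno", "pt"), Participant("Kenji", "ja"), Participant("Claire", "en")]
print(relay_utterance(b"hello", ana, room, toy_asr, toy_mt, toy_tts))
```

Grouping listeners by language means the cost of translation grows with the number of languages in the room rather than the number of participants.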

In terms of commercially available S2S applications, Skype Translator and the upcoming Waverly Labs Pilot offer the most advanced solutions.

Conclusion: MVP Definition

Facial expression and body position tracking

As we have now probed the technological limits and existing offerings on the market, a realistic MVP can be envisaged.

The MVP will draw on two key resources: IKinema’s Project Orion and Olszewski et al.’s (2016) research into facial mapping. Project Orion demonstrated that body position can be mapped simply and accurately into VR, and Olszewski et al. demonstrated that the lip and eye motions of a user wearing an HMD can be accurately animated on a digital avatar.

The HMD will be an HTC Vive headset, and we will require a license for the Vive’s Lighthouse tracking technology. Following Olszewski et al.’s research, the Vive will need to be adapted with a front-facing camera to record the user’s facial expressions.

If our MVP is able to demonstrate facial expression and body position mapping into a VR environment, this would already be a significant step.

The next steps regarding this project include:

- Further feasibility testing to replicate Olszewski et al.’s research and the experiments conducted by IKinema’s Project Orion.

- Entering into a collaborative relationship with IKinema and Olszewski’s team, following the principles of Open Innovation. IKinema’s software is licensable for $500 a year, which facilitates this process.

MVP and Open Innovation

For the MVP, given the technological limitations, a humanlike representation of the user will be sufficient; what I consider more important is an accurate representation of posture and basic facial expression. If we can obtain access to Olszewski et al.’s facial animation algorithm, we can aim for rudimentary representations of basic emotions, which would still give us an interesting product for further development. Ideally, this access would come through an open innovation relationship in which we obtain licenses to use their technology. From an innovation management perspective, it makes more sense to engage Olszewski’s research team and IKinema’s Project Orion and work collaboratively to develop a marketable product.
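A rudimentary representation of basic emotions could be as simple as assigning each frame of facial-expression weights to the nearest of a few emotion templates and showing that emotion on a cartoon-like avatar. The sketch below uses nearest-template (nearest-centroid) classification; the expression names, the four emotions and the template values are hypothetical placeholders that would have to be calibrated against real tracking output.

```python
import math
from typing import Dict

# Hypothetical templates: typical expression weights for each basic emotion.
TEMPLATES: Dict[str, Dict[str, float]] = {
    "neutral": {},
    "happy": {"mouth_smile": 1.0, "cheek_raise": 0.6},
    "surprised": {"brow_raise": 1.0, "jaw_open": 0.7},
    "sad": {"brow_inner_up": 0.8, "mouth_frown": 0.8},
}


def distance(a: Dict[str, float], b: Dict[str, float]) -> float:
    """Euclidean distance between two sparse weight vectors (missing keys = 0)."""
    keys = set(a) | set(b)
    return math.sqrt(sum((a.get(k, 0.0) - b.get(k, 0.0)) ** 2 for k in keys))


def basic_emotion(weights: Dict[str, float]) -> str:
    """Return the name of the template closest to this frame's expression weights."""
    return min(TEMPLATES, key=lambda name: distance(weights, TEMPLATES[name]))


print(basic_emotion({"mouth_smile": 0.9, "cheek_raise": 0.5}))
```

Reducing the face to a handful of labels discards detail, but it is robust to noisy tracking and fits the idea, discussed above, that a less lifelike avatar may be acceptable to users.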

Given the rapid pace at which VR hardware is developing, the MVP needs to demonstrate the concept, since the technical specifications will change as the technology advances over the next few years.

Lessons Learned

The lessons learned from this exercise include a recognition of the high level of complexity involved in a technological innovation project. The VR industry has many actors, each operating in an entrepreneurial capacity and in a state of flux, with no guarantee that their technology will not be superseded by someone else’s. The rapid rate of technological development also means that an innovation project in this area must anticipate the developments likely to be made in the near future and plan accordingly.

Another source of complexity is the question of sales and marketing. In this paper I have examined the technological feasibility of building an MVP for this project, because I needed to verify whether the idea was technologically feasible. The idea could be of interest to a great number of people; however, the potential market is limited to those who have access to an HMD. Given current prices, the project manager needs to be aware that the cost of consumer HMDs must fall before the product can be mass marketed. Nevertheless, if the project is able to demonstrate facial expression and movement tracking, a partnership could be established with entrepreneurs who have the marketing ability to bring it to market. I have considered the user experience of the technology for this project, but as the technology is so new, we first need to confirm that it works before testing it with potential consumers.
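The market constraint can be expressed as simple arithmetic: the share of people interested in the product applies to the population of HMD owners, not to all potential users. The sketch below makes that explicit; the function name and the figures in the usage lines are illustrative placeholders, not market estimates.

```python
def addressable_users(hmd_owners: int, share_interested: float) -> int:
    """Potential users, counting only people who already have access to an HMD."""
    if hmd_owners < 0 or not 0.0 <= share_interested <= 1.0:
        raise ValueError("expected hmd_owners >= 0 and 0 <= share_interested <= 1")
    return round(hmd_owners * share_interested)


# Placeholder inputs for illustration only: the same interest rate applied to two
# hypothetical HMD installed bases shows that reach scales directly with HMD adoption.
for owners in (1_000_000, 10_000_000):
    print(owners, addressable_users(owners, 0.05))
```

Framed this way, a fall in HMD prices that enlarges the installed base raises the product's reach in direct proportion, which is why the cost of consumer HMDs matters so much to the business case.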

This project needs a somewhat longer timeframe than the immediate future. A prototype needs to be built by a team with very specific mathematical and programming knowledge. Once the prototype is built, the way the project will be sold can be discussed, although this discussion could be deferred to the venture capital phase.

References

AMINZADEH, A. Ryan; SHEN, Wade. Advocate: A Distributed Architecture for Speech-to-Speech Translation. LINCOLN LABORATORY JOURNAL, v. 18, n. 1, 2009.

BEER, Stafford. World in torment: A time whose idea must come. Kybernetes, 2013.

BEER, Stafford. Beyond Dispute: The Invention of Team Syntegrity. Chichester: Wiley, 1994.

CHRISTENSEN, Clayton M.; RAYNOR, Michael E.; MCDONALD, Rory. Disruptive innovation. Harvard Business Review, v. 93, n. 12, p. 44-53, 2015.

FUKUDA, Tomohiro et al. Development of the environmental design tool “Tablet MR” on-site by mobile mixed reality technology. Proceedings of The 24th eCAADe (Education and Research in Computer Aided Architectural Design in Europe), p. 84-87, 2006.

GIGANTE, M. Virtual reality: definitions, history and applications. In: EARNSHAW, R. A.; GIGANTE, M. A.,

GUNTHA, Ramesh; HARIHARAN, Balaji; RANGAN, P. Venkat. CMAC: Collaborative multi agent computing for multi perspective multi screen immersive e-learning system. In: Advances in Computing, Communications and Informatics (ICACCI), 2016 International Conference on. IEEE, 2016. p. 1212-1218.

GUNTHA, Ramesh; HARIHARAN, Balaji; VENKAT RANGAN, P. WWoW: World Without Walls Immersive Mixed Reality with Virtual Co-location, Natural Interactions, and Remote Collaboration. In: Intelligent Technologies for Interactive Entertainment: 8th International Conference, INTETAIN 2016, Utrecht, The Netherlands, June 28–30, 2016, Revised Selected Papers. Springer International Publishing, 2017. p. 209-219.

KIM, W. Chan; MAUBORGNE, Renée. Blue ocean strategy, expanded edition: How to create uncontested market space and make the competition irrelevant. Harvard Business Review Press, 2014.

LEWIS, William D. Skype translator: Breaking down language and hearing barriers. Translating and the Computer (TC37), 2015.

OLSZEWSKI, Kyle et al. High-fidelity facial and speech animation for VR HMDs. ACM Transactions on Graphics (TOG), v. 35, n. 6, p. 221, 2016.

OSHRI, Ilan; KOTLARSKY, Julia; WILLCOCKS, Leslie P. Global software development: Exploring socialization and face-to-face meetings in distributed strategic projects. The Journal of Strategic Information Systems, v. 16, n. 1, p. 25-49, 2007.

OYEKAN, John et al. Remote real-time collaboration through synchronous exchange of digitised human–workpiece interactions. Future Generation Computer Systems, v. 67, p. 83-93, 2017.

RIES, Eric. The Lean Startup. UK: Penguin, 2011.

STEIN, Jan-Philipp; OHLER, Peter. Venturing into the uncanny valley of mind—The influence of mind attribution on the acceptance of human-like characters in a virtual reality setting. Cognition, v. 160, p. 43-50, 2017.

TECCHIA, Franco; ALEM, Leila; HUANG, Weidong. 3D helping hands: a gesture based MR system for remote collaboration. In: Proceedings of the 11th ACM SIGGRAPH International Conference on Virtual-Reality Continuum and its Applications in Industry. ACM, 2012. p. 323-328.

Websites and Web articles

50 Virtual Reality Technologies in architecture and engineering, ViaTechnik, 2016, available at: https://www.viatechnik.com/resources/50-virtual-reality-technologies-in-architecture-engineering-and-construction/ accessed April, 2017

Altvr.com, available at www.altvr.com accessed April 2017

Amazon, available at www.amazon.com accessed April 2017

BigScreenVR, available at www.bigscreenvr.com accessed April 2017

Doghead Simulations, available at http://www.dogheadsimulations.com

Digi-Capital, Augmented/Virtual Reality to hit $150 billion disrupting mobile by 2020, April 2015, available at http://www.digi-capital.com/news/2015/04/augmentedvirtual-reality-to-hit-150-billion-disrupting-mobile-by-2020/#.WOj_-Pnyvic accessed April, 2017

Faperj, available at www.Faperj.br accessed April, 2017

Finep, available at www.finep.gov.br accessed April, 2017

Google, 2017, available at https://x.company/about accessed April, 2017

HERNANDEZ, Pedro, Skype Translator Now Works With Japanese for Video and Voice Calls, eWeek, April 2017, available at: http://www.eweek.com/cloud/skype-translator-now-works-with-japanese-for-video-and-voice-calls accessed April, 2017

IKinema project Orion, full body mocap from 6 HTC-Vive Trackers, Feb 2017, YouTube, available at: https://www.youtube.com/watch?v=Khoer5DpQkE accessed April, 2017

Internet Live Stats, available at: http://www.internetlivestats.com/internet-users/ accessed 8 April 2017

JAMES, Paul, Tech Industry Heavyweights Debate the Future of VR at AMD ‘VR Advisory Council’, July 2015, Road to VR, available at http://www.roadtovr.com/tech-industry-heavyweights-debate-the-future-of-vr-at-amd-vr-advisory-council/ accessed April, 2017

JAMES, Paul, Watch: IKinema’s HTC Vive Powered Full Body Motion Capture System is Impressive, Feb 2017, Road to VR, available at: http://www.roadtovr.com/IKinema-project-orion-shows-impressive-motion-capture-just-6-htc-vive-trackers/ accessed April, 2017

IKinema Orion, available at: https://IKinema.com/orion accessed April 2017

LEVSKI, Yariv, Cutting the Cord: Virtual Reality Goes Wireless, App Real, 2016, available at: https://appreal-vr.com/blog/virtual-reality-goes-wireless/ accessed April, 2017

MIT Global Innovation Fund, available at http://www.globalinnovation.fund/ accessed April, 2017

ROBERTSON, Adi, On the Vive’s first birthday, the VR conversation is getting calmer, The Verge, April 2017, available at: http://www.theverge.com/2017/4/5/15191326/htc-vive-anniversary-state-of-vr accessed April, 2017

Stanford University Technical Advisory Committee 2015, available at https://cife.stanford.edu/TAC2015

The State of the Future, Dubai Future Academy, Issue 1, available at: http://www.stateofthefuture.ae/res/DFF-DFA-StateoftheFuture-EN.pdf accessed April 2017

Trusted Reviews, The world’s first 5G hologram call just happened and the future is officially here, April 2017, available at http://www.trustedreviews.com/news/the-world-s-first-5g-hologram-call-just-happened-and-the-future-is-officially-here accessed April, 2017

VR Chat, available at www.vrchat.net accessed April 2017

Waverly Labs home page, available at: http://www.waverlylabs.com/pilot-translation-kit/ accessed April 2017

[3] http://www.globalinnovation.fund/

[4] http://www.vrvca.com/overview

[5] https://twitter.com/Scobleizer/lists/ar-vr-investors

[6] https://virtualrealitytimes.com/2016/11/30/list-of-vr-investment-funds/

[7] http://www.cisco.com/c/en/us/products/conferencing/video-conferencing/index.html

[9] Available at https://x.company/about

[10] http://www.digi-capital.com/news/2015/04/augmentedvirtual-reality-to-hit-150-billion-disrupting-mobile-by-2020/#.WOj_-Pnyvic

[11] Image from http://www.digi-capital.com/news/2015/04/augmentedvirtual-reality-to-hit-150-billion-disrupting-mobile-by-2020/#.WOj_-Pnyvic

[12] “The state of the future”, Dubai p64 available at http://www.stateofthefuture.ae/res/DFF-DFA-StateoftheFuture-EN.pdf accessed March 2017

[13] Source: http://www.internetlivestats.com/internet-users/

[14] http://www.theverge.com/2017/4/5/15191326/htc-vive-anniversary-state-of-vr

[15] http://www.roadtovr.com/tech-industry-heavyweights-debate-the-future-of-vr-at-amd-vr-advisory-council/

[16] Image from http://www.businessinsider.com/virtual-reality-headset-sales-explode-2015-4

[17] http://www.theverge.com/2017/4/5/15191326/htc-vive-anniversary-state-of-vr

[18] http://www.theverge.com/2017/4/5/15191326/htc-vive-anniversary-state-of-vr

[19] http://www.theverge.com/2017/4/5/15191326/htc-vive-anniversary-state-of-vr

[20] http://www.trustedreviews.com/news/the-world-s-first-5g-hologram-call-just-happened-and-the-future-is-officially-here

[21] Olszewski, K., Lim, J., Saito, S., Li, H. 2016. High-Fidelity Facial and Speech Animation for VR HMDs, ACM Transactions on Graphics. 35, 6, Article 221 (November 2016), 14 pages, available at http://dl.acm.org/citation.cfm?doid=2980179.2980252

[22] See https://en.wikipedia.org/wiki/Uncanny_valley

[23] https://www.youtube.com/watch?v=1Lv9A2pnnEE&feature=youtu.be

[24] http://www.roadtovr.com/ikinema-project-orion-shows-impressive-motion-capture-just-6-htc-vive-trackers/

[25] Available here: https://www.youtube.com/watch?v=Khoer5DpQkE

[26] https://ikinema.com/orion

[27] Image from https://ikinema.com/orion

[28] https://www.viatechnik.com/resources/50-virtual-reality-technologies-in-architecture-engineering-and-construction/

[29] https://www.viatechnik.com/resources/50-virtual-reality-technologies-in-architecture-engineering-and-construction/

[30] https://cife.stanford.edu/TAC2015

[31] This project was developed by Stanford’s Kincho Law and Renate Fruchter

[32] http://www.dogheadsimulations.com

[36] Doghead simulations’ ruumii, available at http://www.dogheadsimulations.com/

[37] Available at http://bigscreenvr.com/

[38] http://www.eweek.com/cloud/skype-translator-now-works-with-japanese-for-video-and-voice-calls